Is the race to AI sustainable?

As we enter 2025, announcements about the latest advances in AI are everywhere in the tech landscape. AI is a genuine groundswell that is set to revolutionise our daily lives. However, this race to AI comes at the price of an ever-increasing impact on our environment, and it requires investment that seems increasingly excessive.

Against this backdrop, it’s fair to ask what’s next. Where are we heading? Towards ever bigger and more powerful models, or towards more specialised, optimised investment and development that is more respectful of our environment?

Generative AI: the mad rush to AGI!

After the major advances seen in 2024, with the release of the latest LLMs from OpenAI, Anthropic, Google, Meta and Mistral, 2025 also seems full of promises and fantasies about the arrival of the long-awaited AGI!

AGI, or artificial general intelligence, refers to AI systems capable of matching or surpassing human capabilities across a wide range of cognitive tasks. Unlike current AI, which excels at specific tasks, AGI would be able to learn and solve problems in many different areas without human intervention.

Its generalist capabilities would enable it to address virtually any field, including science, the arts, research, process automation and optimisation, and the execution of complex tasks.

To achieve ever-higher performance, each new version in this race requires more computing power and more data, which means ever-greater energy consumption and investment.

This quest for general AI is leading to the development of ever larger models, which consequently consume more and more resources. Indeed, the resources needed to train each new generation of model are growing exponentially.

2024-2025: the great AI investment boom

Firstly, in financial terms, the figures are impressive. In 2024, companies specialising in generative AI alone raised $56 billion. Microsoft plans to spend $80 billion in 2025 on data centres for artificial intelligence training and inference.

At Meta, generative AI has become the top priority. Its CEO, Mark Zuckerberg, has announced that the company’s next advanced model, Llama 4, is being trained on a cluster of more than 100,000 Nvidia H100 GPUs. Meta planned to spend up to $40 billion on infrastructure in 2024, a 42% increase on 2023.

As part of the Stargate project, Donald Trump has announced an investment of $500 billion over the next four years, led by OpenAI, SoftBank and Oracle, to develop AI infrastructure in the United States.

Europe is not to be outdone! Emmanuel Macron has responded to Donald Trump’s announcement of the Stargate project with a massive €109 billion investment plan to develop AI in France.

A large part of this €109 billion will come from the United Arab Emirates, with funding of between €30 and €50 billion. The plan includes a strategic agreement with the UAE to build the largest AI campus in Europe, including a 1 GW data centre dedicated to AI in France, equivalent to the output of a nuclear reactor.

Increasing energy consumption

Secondly, there is the question of energy. Zuckerberg has announced a 2 GW cluster for 2025, and Microsoft and OpenAI have launched the Stargate project to create several clusters of between 2 and 5 GW. By way of comparison, a nuclear reactor produces around 1 GW.

Sam Altman, CEO of OpenAI, is advocating an all-out race for ever-larger AI data centres. He plans to build 5 to 7 data centres of 5 gigawatts each, each one equivalent to the energy consumption of around 3 million homes.
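The power equivalences quoted above can be sanity-checked with simple arithmetic. The sketch below assumes that a data centre draws its rated power continuously all year, and it uses only the article’s own figures (5 GW per site, 3 million homes, about 1 GW per reactor); the function names are illustrative, and the result is an order-of-magnitude check rather than a precise measurement.

```python
# Back-of-the-envelope check of the power equivalences quoted in the article.
# Assumption (not from the article): each site draws its rated power 24/7.

HOURS_PER_YEAR = 24 * 365  # 8,760 hours in a non-leap year
REACTOR_GW = 1.0           # order of magnitude for one nuclear reactor, per the text


def kw_per_home(site_power_gw: float, homes: float) -> float:
    """Average continuous power per home (kW) implied by the equivalence."""
    return site_power_gw * 1e6 / homes  # 1 GW = 1,000,000 kW


def kwh_per_home_per_year(site_power_gw: float, homes: float) -> float:
    """Annual energy per home (kWh) implied by the equivalence."""
    return kw_per_home(site_power_gw, homes) * HOURS_PER_YEAR


site_gw = 5.0       # one of the planned 5 GW data centres
homes = 3_000_000   # "around 3 million homes"

print(f"{kw_per_home(site_gw, homes):.2f} kW per home on average")
print(f"{kwh_per_home_per_year(site_gw, homes):,.0f} kWh per home per year")
print(f"about {site_gw / REACTOR_GW:.0f} reactors per data centre")

# Whole programme: 5 to 7 sites of 5 GW each.
for n_sites in (5, 7):
    total_gw = n_sites * site_gw
    print(f"{n_sites} sites: {total_gw:.0f} GW, about {total_gw / REACTOR_GW:.0f} reactors")
```

Under these assumptions, each 5 GW site corresponds to roughly 1.7 kW of continuous power per home (about 14,600 kWh a year) and to around five reactors, while the full programme of 5 to 7 sites amounts to 25 to 35 GW. The per-home figure depends heavily on which country’s households are taken as the reference.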

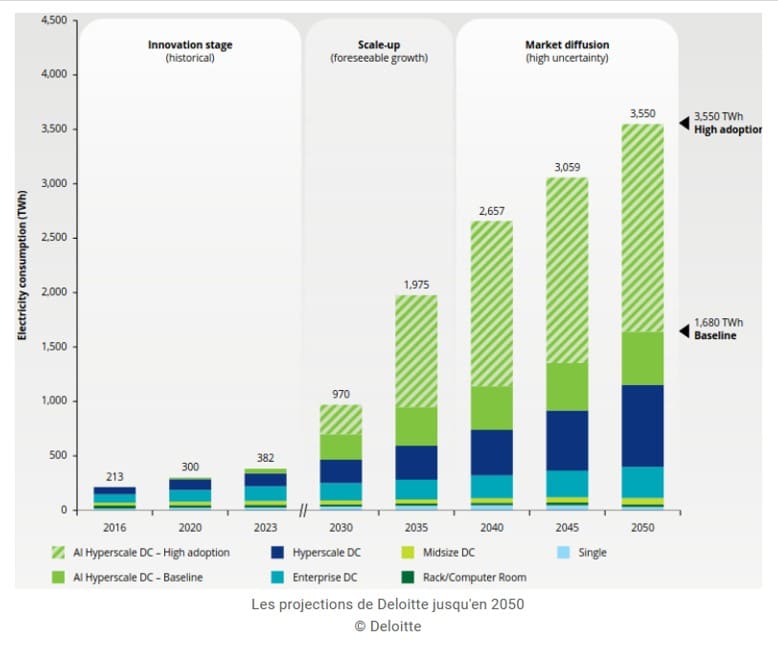

Data centres require colossal amounts of energy. According to a Deloitte study, at the current rate of adoption and under the pressure of AI, data centre power consumption could increase ninefold by 2050.

Gartner predicts that power shortages will constrain 40% of existing AI data centres by 2027.

This could jeopardise AI’s development ambitions, since our energy infrastructure is growing much more slowly than the AI market.

Generative AI: the need for ever more data

Finally, there is the question of data. Training these models requires gigantic quantities of data. For example, virtually all the public data on the internet is reported to have already been used to train GPT-4. There is little point in building ever-larger AIs if there is not enough data to feed them.

Synthetic data generated by the AIs themselves will therefore be used to train future models.

Generative AI: growing difficulties in developing new models

As a result, there have been delays and difficulties in developing new generations of models. For example, OpenAI is struggling to release GPT-5, which was initially expected at the end of 2024.

According to a report by The Information, the quality gains achieved with the new version are much more modest than those observed during the transition between GPT-3 and GPT-4. The slowdown in performance improvements could be explained by the increasing difficulty of finding high-quality training data.

GPT-4.5, released at the end of February 2025, has thus been a disappointment. Although this version offers some interesting improvements, it remains in the same vein as previous versions and does not offer any genuinely new functions.

OpenAI has clearly focused on emotional intelligence rather than pure analytical power, aiming for a more ‘human’ AI: many testers note that this version is more fluid, more natural and less robotic in its exchanges. However, while everything is ‘a little better’, it is far from a radical transformation.

Generative AI: a major impact on the environment

How can the generative AI sector continue to grow at such a breakneck pace? As we enter 2025, investment in generative AI seems to be defying all expectations.

In a world where climate change is increasingly felt everywhere, AI continues to develop at an unsustainable pace, with ever greater environmental impacts, not only on CO2 emissions but also on water.

Training and running the models requires a huge number of servers, which generate heat, so vast amounts of water are needed to cool the machine rooms. In the United States, for example, data centres are often located in arid areas such as Arizona, which exacerbates existing water stress.

With the growing demand for AI, data centre development in Los Angeles has increased sevenfold in two years, adding to the pressure on water resources in a region already affected by prolonged drought.

On a global scale, AI could withdraw up to 6.6 billion cubic metres of water by 2027, up to six times Denmark’s total annual water withdrawal.

Generative AI: development directions for the coming years

There will be several coexisting approaches to the development of artificial intelligence:

The first, currently adopted by the market leaders and OpenAI in particular, is to continue the race for power and develop general-purpose AI based on ever more resources, with the impacts mentioned above.

The second is to move towards more frugal AI, i.e. AI that uses fewer resources for its training and operation and has a more reasonable impact on the environment.

Ever more resources for super-powered general-purpose AI

Clearly, the AI race is not going to stop. AI is tomorrow’s economic and political weapon, and all the major powers, led by the United States and China, are engaged in a frantic race to lead the field.

There will indeed be an AI war to dominate the market. This is OpenAI’s clearly stated strategy: despite a loss of some $5 billion in 2024, the company continues to raise and invest massive amounts of money.

At the start of the year, Sam Altman, OpenAI’s CEO, announced that the company’s objective now goes beyond building general AI, towards a superintelligence that exceeds human capabilities. The main goal is to drastically accelerate scientific research and discovery.

On the American side, the race for power continues. In Sam Altman’s own words, the new GPT-4.5 requires a gigantic infrastructure: ‘bad news: it is a giant, expensive model’.

The same goes for Elon Musk, whose company xAI set up a cluster of 200,000 GPUs at breakneck speed to train its Grok 3 model. This new AI was presented at the beginning of 2025 to compete with ChatGPT. According to Elon Musk, it was trained with ten times more computing power than its predecessor.

xAI’s supercomputer, Colossus, is billed as one of the most powerful AI clusters in the world:

- Colossus, xAI’s flagship, is a training cluster initially made up of 100,000 Nvidia H100 GPUs.

- Colossus is set to double in size over the coming months.

- A further 100,000 GPUs will be added, including the long-awaited H200s.

Towards more frugal AI

Given the current challenges in terms of financial investment, energy and data, the race to train ever-larger general-purpose models is unlikely to continue at the current pace.

Against this backdrop, a number of developments are focusing on the optimisation of large models and the development of smaller, more specialised models.

Having smaller, highly specialised systems cooperate within a larger, generalist system is one way of building a superintelligence. This is what we are seeing with the move to multimodality and the tendency for large models to be subdivided into smaller models that coordinate to meet demands.
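The paragraph above does not name a specific technique. One well-known way for a large model to be split into smaller, coordinating parts is the mixture-of-experts (MoE) architecture, in which a gating function sends each input to only a few specialised sub-networks. The toy sketch below assumes that reading: the four one-line "experts", the gate weights and the choice of k = 2 are all hypothetical, whereas a real MoE layer uses learned neural sub-networks and a learned gate.

```python
import math
import random

# Hypothetical "experts": tiny specialised functions standing in for the
# neural sub-networks of a real mixture-of-experts layer.
EXPERTS = [
    lambda x: [2.0 * v for v in x],  # expert 0
    lambda x: [v + 1.0 for v in x],  # expert 1
    lambda x: [-v for v in x],       # expert 2
    lambda x: [v * v for v in x],    # expert 3
]


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route(x, gate_weights, k=2):
    """Score every expert cheaply, keep the top k, and normalise their weights."""
    # Gate score for each expert: dot product of the input with its gate vector.
    scores = [sum(w * v for w, v in zip(row, x)) for row in gate_weights]
    top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    weights = softmax([scores[i] for i in top])
    return top, weights


def moe_forward(x, gate_weights, k=2):
    """Combine the outputs of the selected experts; unselected experts never run."""
    top, weights = route(x, gate_weights, k)
    out = [0.0] * len(x)
    for i, w in zip(top, weights):
        y = EXPERTS[i](x)
        out = [o + w * yi for o, yi in zip(out, y)]
    return out, top


random.seed(0)
dim = 3
gate = [[random.uniform(-1, 1) for _ in range(dim)] for _ in EXPERTS]
x = [0.5, -1.0, 2.0]
y, used = moe_forward(x, gate, k=2)
print("experts used:", used, "out of", len(EXPERTS))
print("output:", [round(v, 3) for v in y])
```

Because only two of the four experts are evaluated for each input, the computation per input grows with k rather than with the total number of experts. This is one concrete sense in which a large system built from specialised parts can do more with fewer resources.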

The aim is to do better with fewer resources. There is no doubt that innovation in this area will lead to major advances.

DeepSeek, the Chinese AI that outperforms ChatGPT

A first step in this direction has been taken by DeepSeek, the Chinese model, which has announced performance comparable to GPT-4o with far fewer resources.

While the American AI giants are engaged in a frantic race for computational resources, DeepSeek, the small Chinese AI, is demonstrating that we can do just as well with far fewer resources!

Deprived of the latest Nvidia chips by Western restrictions, DeepSeek’s engineers have had to rethink the very architecture of their model. DeepSeek’s secret? A hybrid, optimised approach based on two pillars: hardware and algorithms. A winning bet that demonstrates that raw power is not everything.

This approach has drastically reduced training costs, energy consumption and computing power requirements. The result is a faster, more efficient model.

Mistral AI, the French AI that rivals the best

Co-founded in 2023 by Arthur Mensch, a 32-year-old engineer, Mistral AI achieved a valuation of over €6 billion in just two years. With a record fund-raising round of €600 million, Mistral is demonstrating that it is possible to compete with the American giants using open-source models and much more modest resources.

Rather than aiming for maximum model size, its engineers have optimised operational efficiency. On 30 January 2025, Mistral announced a new, ultra-optimised model, Mistral Small 3, with results comparable to those of larger models.

Thanks to its optimised architecture and reduced number of layers, Mistral Small 3 rivals systems up to three times larger, such as Llama 3.3 70B or Qwen 32B.

At the AI Action Summit in Paris, Mistral AI was in the spotlight, making the news in particular with a number of partnership announcements: an agreement with AFP to use the news agency’s content, and contracts with BNP Paribas, Veolia, Stellantis, France Travail, Outscale and others.

Mistral AI has also announced a partnership with Nvidia challenger Cerebras Systems, which provides the infrastructure used to run the model. According to Mistral, thanks to this infrastructure, its Le Chat application can answer users’ questions at a rate of 1,000 words per second.

The French start-up is gradually establishing itself as a pillar of artificial intelligence in Europe. In short, Mistral is becoming a heavyweight, and, as DeepSeek recently showed, the competition is far from over. Never before has a French player been so far ahead in the tech market, delivering excellent performance with resources that bear no comparison with those of the big names in AI.

Is frugal AI a losing battle?

Frugal AI is a necessity rather than a choice for many players, particularly in Europe and China, where the resources available are much more modest than those of the American tech giants.

It also invites a rethink of the drive to maximise the size of language models in pursuit of ever more powerful AI. Frugal AI ‘would offer threefold environmental, economic and sovereignty benefits, and would enable French and European start-ups to improve their competitiveness’, stresses the French Agency for Ecological Transition (ADEME).

Frugal AI therefore seems to be a path that is set to develop, particularly in Europe, given that the current model of the major general-purpose engines seems unsustainable from every point of view.

Frugal AI, a dangerous oxymoron

The concept of frugal AI provides a framework of principles for designing more economical AI. The ambition: to counter AI’s galloping environmental footprint. However, even so-called frugal AI remains a very resource-hungry technology.

In this respect, frugal AI can be seen as a dangerous oxymoron designed to ease our conscience. Everyone knows that generative AI will have a major impact on the global environment, not least because of the huge data centres required to run it.

However, as AI is seen as a strategic issue for technological sovereignty, everyone is investing massively in these technologies. In this context, it is not certain that the concept of frugality will find sufficient resonance to influence the trajectory taken by AI in our society and its environmental impact.